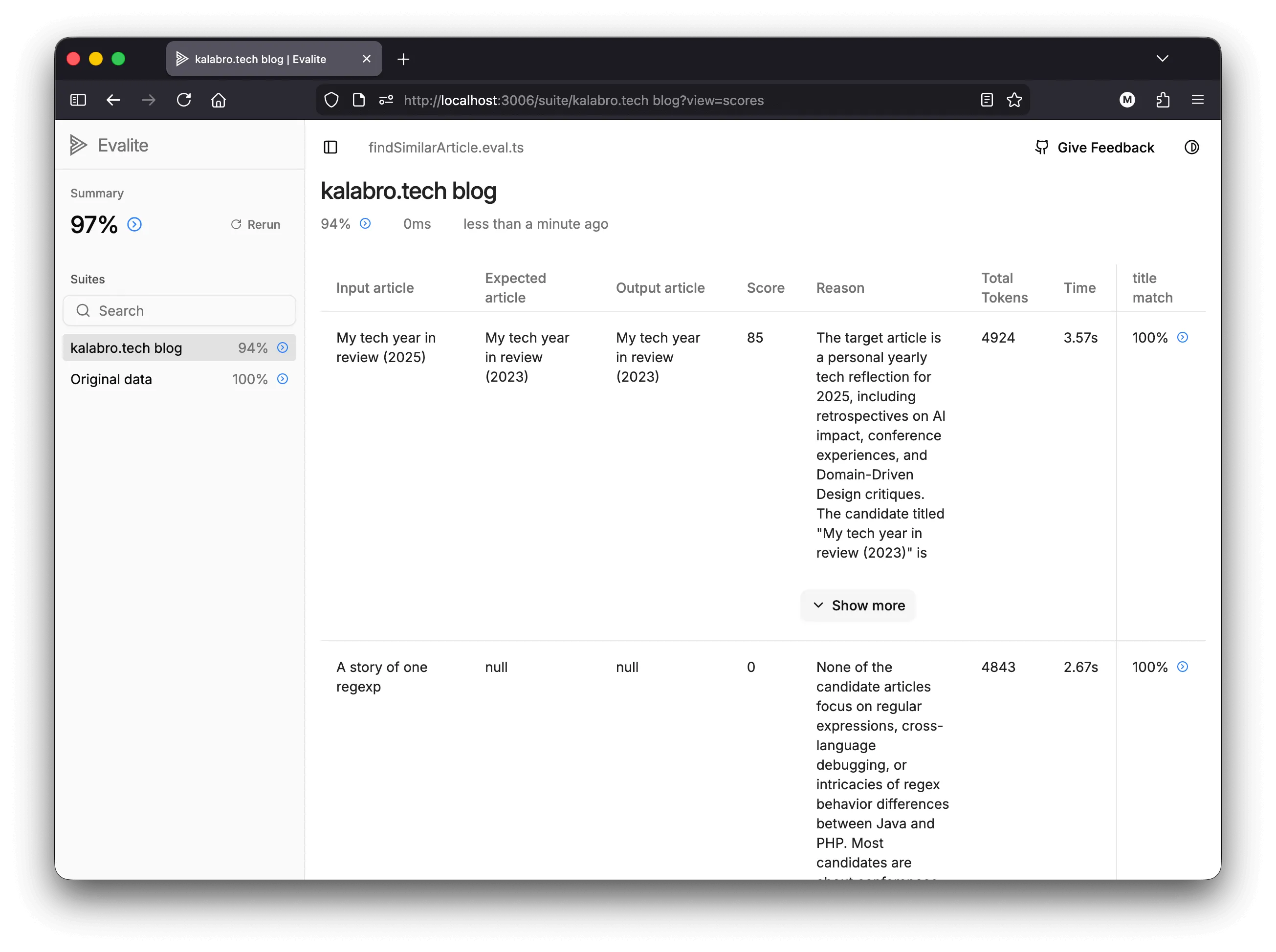

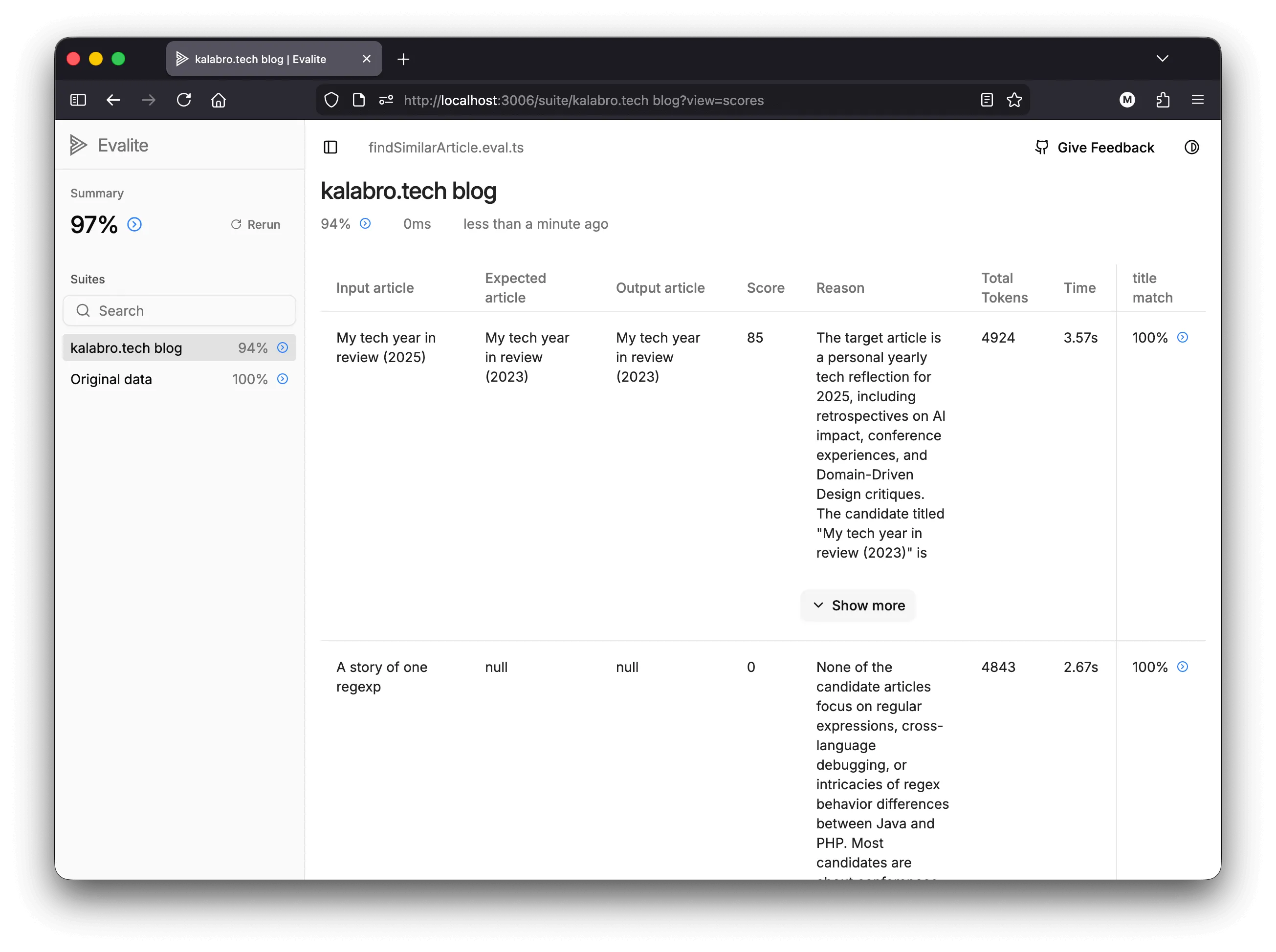

Evalite by Matt Pocock is a library for testing AI-powered apps on your local machine.

AI, conferences, and DDD rants to end the year

Overview of the new Balancing Coupling model with examples and interactive quizzes. Enjoy!

My highlights from the first book in Kent Beck's series on software design.

Thinking Architecturally by Nate Schutta is a short, practical book for developers who want to stay current and grow in the rapidly changing tech industry. My favourite part is about “ilities”. Read on to learn more.

My review of the book “Learning Domain-Driven Design”, which covers DDD and how it maps to common software architecture patterns.

Previous weeks are available in the archive.